2: IceNet Pipeline usage#

Context#

Purpose#

The first notebook demonstrated the use of high level command-line interfaces (CLI) of the IceNet library to download, process, train and predict from end to end.

Now that you have gone through the basic steps of running the IceNet model via the CLI, you may wish to establish a framework to run the model automatically for end-to-end runs. This is often called a Pipeline. A Pipeline can schedule ongoing model runs or run multiple model variations simultaneously.

This notebook illustrates the use of helper scripts from the IceNet pipeline repository for testing and producing operational forecasts.

Please do go through the first notebook before proceeding with this, as the data download exists outside of the pipeline, and this is covered in detail in the first notebook. However, even so, this notebook has been designed to be run independent of other notebooks in this repository.

This demonstrator notebook has been run on the British Antarctic Survey in-house HPC, however, the pipeline is by no means limited to running solely on HPCs.

Highlights#

The key features of an end to end run are:

Note: Steps 3, 4 and 5 are within the IceNet pipeline.

Contributions#

Notebook#

James Byrne (author)

Bryn Noel Ubald (co-author)

Matthew Gascoyne (co-author)

Please raise issues in this repository to suggest updates to this notebook!

Contact me at jambyr <at> bas.ac.uk for anything else…

Modelling codebase#

James Byrne (code author), Bryn Noel Ubald (code author), Tom Andersson (science author)

Modelling publications#

Andersson, T.R., Hosking, J.S., Pérez-Ortiz, M. et al. Seasonal Arctic sea ice forecasting with probabilistic deep learning. Nat Commun 12, 5124 (2021). https://doi.org/10.1038/s41467-021-25257-4

Involved organisations#

The Alan Turing Institute and British Antarctic Survey

1. Introduction#

CLI vs Library vs Pipeline usage#

The IceNet package is designed to support automated runs from end to end by exposing the CLI operations demonstrated in the first notebook. These are simple wrappers around the library itself, and any step of this can be undertaken manually or programmatically by inspecting the relevant endpoints.

IceNet can be run in a number of ways: from the command line, the python interface, or as a pipeline.

The rule of thumb to follow:

Use the pipeline repository if you want to run the end to end IceNet processing out of the box.

Adapt or customise this process using

icenet_*commands described in this notebook and in the scripts contained in the pipeline repo.For ultimate customisation, you can interact with the IceNet repository programmatically (which is how the CLI commands operate.) For more information look at the IceNet CLI implementations and the library notebook, along with the library documentation.

Using the Pipeline#

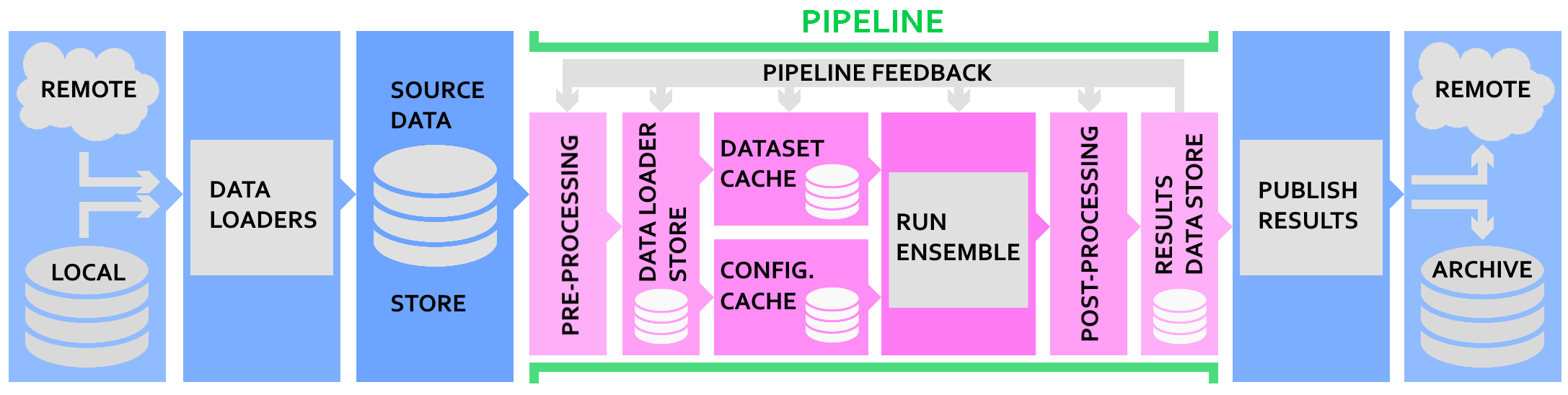

Now that you have gone through the basic steps of running the IceNet model via the high-level CLI commands, you may wish to establish a framework to run the model automatically for end-to-end runs. This is often called a Pipeline. A Pipeline can schedule ongoing model runs or run multiple model variations simultaneously. The pipeline is driven by a series of bash scripts, and an environmental ENVS configuration file.

To automatically produce daily IceNet forecasts we train multiple variations of the model, each with different starting conditions. We call this ensemble training. Then we run predictions for each model variation, producing a mean and error across the whole model ensemble. This captures some of the model uncertainty.

Data#

This assumes that you have a data store in a data/ folder (This can be the same as the data/ directory generated when running through the first notebook). Since the data is common across pipelines, you do not need to redownload data that you have previously downloaded. It is recommended to symbolically link to a data store such that data is only downloaded when has not been downloaded previously.

Ensemble Running#

To do this, an icenet-pipeline repository is available. The icenet-pipeline offers the run_train_ensemble.sh and run_predict_ensemble.sh script which operates similarly to the icenet_train and icenet_predict CLI commands demonstrated in the first notebook from the IceNet library.

2. Setup#

Get the IceNet Pipeline#

Before progressing you will need to clone the icenet-pipeline repository. Assuming you have followed the directory structure from the first notebook:

git clone https://www.github.com/icenet-ai/icenet-pipeline.git green

ln -s green notebook-pipeline

cd icenet-notebooks

We clone a ‘fresh’ pipeline repository into a directory called ‘green’ (as an arbitrary way of identifying the fresh pipeline) and then symbolically link to it. This allows us to symbolically swap to another pipeline later if we want to.

my-icenet-project/ <--- we're in here!

├── data/

├── icenet-notebooks/

├── green/ <--- Clone of icenet-pipeline

└── notebook-pipeline@ <--- Symlink to the green/ `icenet-pipeline` repo we've just cloned into

# Viewing symbolically linked files.

!find .. -maxdepth 1 -type l -ls

139662625249 0 lrwxrwxrwx 1 bryald ailab 5 Feb 18 15:22 ../notebook-pipeline -> green

Configure the Pipeline#

Move into the notebook-pipeline directory.

import os

os.chdir("../notebook-pipeline")

!pwd

/data/hpcdata/users/bryald/git/icenet/icenet/green

The pipeline is driven by environmental variables that are defined within an ENVS file.

There is an example ENVS file (ENVS.example) in the ../notebook-pipeline directory which is what ENVS is symbolically linked to by default.

You can copy the ENVS.example file and create many variations to cover your usage scenario. Then, update the ENVS file symbolic link to the run you would like to go through.

As a demonstrator, we will change the existing my-icenet-project/notebook-pipeline/ENVS link that points to my-icenet-project/notebook-pipeline/ENVS.example.

We will instead point it to the example in this notebook repository after copying it over my-icenet-project/icenet-notebooks/ENVS.notebook_tutorial.

The ENVS files are typically collated within the notebook-pipeline repo, hence why we link the ENVS.notebook_tutorial in this repository to ENVS in the notebook-pipeline repository.

# Unlink the existing symoblic link (under `my-icenet-project/notebook-pipeline/ENVS`)

!unlink ENVS

# Point to the ENVS file from the icenet-notebooks repository (where this notebook is)

!ln -s ../icenet-notebooks/ENVS.notebook_tutorial ENVS

Before running through this notebook, please export the following variables to point to your icenet conda environment (if different to the default found in the ENVS file), as an example:

export ICENET_HOME=${HOME}/icenet/icenetv0.2.9

export ICENET_CONDA=${HOME}/conda-envs/icenet

This will overwrite the defaults within the ENVS file, and will be used by the pipeline.

# Looking at the symlinked files in the `notebook-pipeline` directory

!find . -maxdepth 1 -type l -ls

352250552559 0 lrwxrwxrwx 1 bryald ailab 7 Feb 18 15:33 ./data -> ../data

352248255940 0 lrwxrwxrwx 1 bryald ailab 42 Feb 18 15:40 ./ENVS -> ../icenet-notebooks/ENVS.notebook_tutorial

Download data before initiating pipeline#

As shown in the pipeline image at the top, the source data download is external to the pipeline since it is common across pipelines.

Hence, the same commands from the first notebook can be used to download the required data into a data store (if not previously downloaded) and symbolically linked into in the working directory before using the pipeline. Please check the first notebook for details regarding the usage of these commands.

Please note that you do not need to redownload data you have already downloaded previously (i.e., for date ranges you have previously downloaded into your data store).

Assuming you’ve run the first notebook, you’ve already downloaded the necessary data to the ../data directory, so, we can now symbolically link the data into our working directory. The code will skip over any previously downloaded date ranges. You can link to the datastore by running ln -s ../data.

!icenet_data_masks south

[18-02-25 15:40:45 :INFO ] - Skipping ./data/masks/south/masks/active_grid_cell_mask_01.npy, already exists

[18-02-25 15:40:45 :INFO ] - Skipping ./data/masks/south/masks/active_grid_cell_mask_02.npy, already exists

[18-02-25 15:40:45 :INFO ] - Skipping ./data/masks/south/masks/active_grid_cell_mask_03.npy, already exists

[18-02-25 15:40:45 :INFO ] - Skipping ./data/masks/south/masks/active_grid_cell_mask_04.npy, already exists

[18-02-25 15:40:45 :INFO ] - Skipping ./data/masks/south/masks/active_grid_cell_mask_05.npy, already exists

[18-02-25 15:40:45 :INFO ] - Skipping ./data/masks/south/masks/active_grid_cell_mask_06.npy, already exists

[18-02-25 15:40:45 :INFO ] - Skipping ./data/masks/south/masks/active_grid_cell_mask_07.npy, already exists

[18-02-25 15:40:45 :INFO ] - Skipping ./data/masks/south/masks/active_grid_cell_mask_08.npy, already exists

[18-02-25 15:40:45 :INFO ] - Skipping ./data/masks/south/masks/active_grid_cell_mask_09.npy, already exists

[18-02-25 15:40:45 :INFO ] - Skipping ./data/masks/south/masks/active_grid_cell_mask_10.npy, already exists

[18-02-25 15:40:45 :INFO ] - Skipping ./data/masks/south/masks/active_grid_cell_mask_11.npy, already exists

[18-02-25 15:40:45 :INFO ] - Skipping ./data/masks/south/masks/active_grid_cell_mask_12.npy, already exists

!icenet_data_era5 south --vars uas,vas,tas,zg --levels ',,,500|250' 2020-1-1 2020-4-30

[18-02-25 15:40:48 :INFO ] - ERA5 Data Downloading

2025-02-18 15:40:48,730 INFO [2024-09-26T00:00:00] Watch our [Forum](https://forum.ecmwf.int/) for Announcements, news and other discussed topics.

[18-02-25 15:40:48 :INFO ] - [2024-09-26T00:00:00] Watch our [Forum](https://forum.ecmwf.int/) for Announcements, news and other discussed topics.

2025-02-18 15:40:48,731 WARNING [2024-06-16T00:00:00] CDS API syntax is changed and some keys or parameter names may have also changed. To avoid requests failing, please use the "Show API request code" tool on the dataset Download Form to check you are using the correct syntax for your API request.

[18-02-25 15:40:48 :WARNING ] - [2024-06-16T00:00:00] CDS API syntax is changed and some keys or parameter names may have also changed. To avoid requests failing, please use the "Show API request code" tool on the dataset Download Form to check you are using the correct syntax for your API request.

[18-02-25 15:40:48 :INFO ] - Building request(s), downloading and daily averaging from ERA5 API

[18-02-25 15:40:48 :INFO ] - Processing single download for uas @ None with 121 dates

[18-02-25 15:40:48 :INFO ] - Processing single download for vas @ None with 121 dates

[18-02-25 15:40:48 :INFO ] - Processing single download for tas @ None with 121 dates

[18-02-25 15:40:48 :INFO ] - Processing single download for zg @ 500 with 121 dates

[18-02-25 15:40:48 :INFO ] - Processing single download for zg @ 250 with 121 dates

[18-02-25 15:40:49 :INFO ] - No requested dates remain, likely already present

[18-02-25 15:40:49 :INFO ] - No requested dates remain, likely already present

[18-02-25 15:40:49 :INFO ] - No requested dates remain, likely already present

[18-02-25 15:40:49 :INFO ] - No requested dates remain, likely already present

[18-02-25 15:40:49 :INFO ] - No requested dates remain, likely already present

[18-02-25 15:40:49 :INFO ] - 0 daily files downloaded

[18-02-25 15:40:49 :INFO ] - No regrid batches to processing, moving on...

[18-02-25 15:40:49 :INFO ] - Rotating wind data prior to merging

[18-02-25 15:40:50 :INFO ] - Rotating wind data in ./data/era5/south/uas ./data/era5/south/vas

[18-02-25 15:40:50 :INFO ] - 0 files for uas

[18-02-25 15:40:50 :INFO ] - 0 files for vas

Note: We also make sure to also download sea-ice concentration data for the time period we’re predicting for (in addition to the training range).

In this case, the ENVS file defines the latest train date as being 2020-3-31, and the latest test date being 2020-4-2. Since we would like to forecast for 7 days (Also defined within the ENVS file under export FORECAST_DAYS=7), we should download up to 7 days after the end dates of train/validation/test.

This will also be of use when comparing the prediction data.

These do not have to be downloaded in separate date ranges, you can cover the entire period in one go (2019-12-29 2020-4-30), or use (e.g. 2019-12-29,2020-4-3 2020-3-31,2020-4-23) syntax. The download is split into multiple sections to also demonstrate that previously downloaded data will be skipped over. This is the same for the above ERA5 download.

# Date range for training (Adding 7 days forecast period to end date)

!icenet_data_sic south -d 2020-1-1 2020-4-7

# Date range for validation (Adding 7 days forecast period to end date)

!icenet_data_sic south -d 2020-4-3 2020-4-30

# Date range for test (Adding 7 days forecast period to end date)

# Note: Above date range already covers this, so this data will not be re-downloaded.

!icenet_data_sic south -d 2020-4-1 2020-4-9

[18-02-25 15:40:54 :INFO ] - OSASIF-SIC Data Downloading

[18-02-25 15:40:54 :INFO ] - Downloading SIC datafiles to .temp intermediates...

[18-02-25 15:40:55 :INFO ] - Excluding 121 dates already existing from 98 dates requested.

[18-02-25 15:40:55 :INFO ] - Opening for interpolation: ['./data/osisaf/south/siconca/2020.nc']

[18-02-25 15:40:55 :INFO ] - Processing 0 missing dates

[18-02-25 15:40:56 :INFO ] - OSASIF-SIC Data Downloading

[18-02-25 15:40:56 :INFO ] - Downloading SIC datafiles to .temp intermediates...

[18-02-25 15:40:57 :INFO ] - Excluding 121 dates already existing from 28 dates requested.

[18-02-25 15:40:57 :INFO ] - Opening for interpolation: ['./data/osisaf/south/siconca/2020.nc']

[18-02-25 15:40:57 :INFO ] - Processing 0 missing dates

[18-02-25 15:40:58 :INFO ] - OSASIF-SIC Data Downloading

[18-02-25 15:40:58 :INFO ] - Downloading SIC datafiles to .temp intermediates...

[18-02-25 15:40:59 :INFO ] - Excluding 121 dates already existing from 9 dates requested.

[18-02-25 15:40:59 :INFO ] - Opening for interpolation: ['./data/osisaf/south/siconca/2020.nc']

[18-02-25 15:40:59 :INFO ] - Processing 0 missing dates

3. Process#

The following command processes the downloaded data for the dates defined in the ENVS file.

This is equivalent to running icenet_process_era5, icenet_process_ora5, icenet_process_sic, icenet_process_metadata commands from the IceNet library (as demonstrated in the first notebook).

The arguments passed to these commands are obtained from the PROC_ARGS_* variables in the ENVS file.

And, the dates that are processed are defined by the following variables in the ENVS file:

TRAIN_START_*TRAIN_END_*VAL_START_*VAL_END_*TEST_START_*TEST_END_*

This only needs to be run once unless the above variables need to be changed. Hence, it can be run as a precursor to the pipeline if the processed data does not need to change.

!./run_data.sh south

CondaError: Run 'conda init' before 'conda activate'

[18-02-25 15:41:13 :INFO ] - Got 91 dates for train

[18-02-25 15:41:13 :INFO ] - Got 21 dates for val

[18-02-25 15:41:13 :INFO ] - Got 2 dates for test

[18-02-25 15:41:13 :INFO ] - Creating path: ./processed/tutorial_pipeline_south/era5

[18-02-25 15:41:13 :DEBUG ] - Setting range for linear trend steps based on 7

[18-02-25 15:41:13 :INFO ] - Processing 91 dates for train category

[18-02-25 15:41:13 :INFO ] - Including lag of 1 days

[18-02-25 15:41:13 :INFO ] - Including lead of 93 days

[18-02-25 15:41:13 :DEBUG ] - Globbing train from ./data/era5/south/**/[12]*.nc

[18-02-25 15:41:13 :DEBUG ] - Globbed 376 files

[18-02-25 15:41:13 :DEBUG ] - Create structure of 376 files

[18-02-25 15:41:13 :INFO ] - Processing 21 dates for val category

[18-02-25 15:41:13 :INFO ] - Including lag of 1 days

[18-02-25 15:41:13 :INFO ] - Including lead of 93 days

[18-02-25 15:41:13 :DEBUG ] - Globbing val from ./data/era5/south/**/[12]*.nc

[18-02-25 15:41:13 :DEBUG ] - Globbed 376 files

[18-02-25 15:41:13 :DEBUG ] - Create structure of 376 files

[18-02-25 15:41:13 :INFO ] - Processing 2 dates for test category

[18-02-25 15:41:13 :INFO ] - Including lag of 1 days

[18-02-25 15:41:13 :INFO ] - Including lead of 93 days

[18-02-25 15:41:13 :DEBUG ] - Globbing test from ./data/era5/south/**/[12]*.nc

[18-02-25 15:41:13 :DEBUG ] - Globbed 376 files

[18-02-25 15:41:13 :DEBUG ] - Create structure of 376 files

[18-02-25 15:41:13 :INFO ] - Got 2 files for psl

[18-02-25 15:41:13 :INFO ] - Got 2 files for ta500

[18-02-25 15:41:13 :INFO ] - Got 2 files for tas

[18-02-25 15:41:13 :INFO ] - Got 2 files for tos

[18-02-25 15:41:13 :INFO ] - Got 2 files for uas

[18-02-25 15:41:13 :INFO ] - Got 2 files for vas

[18-02-25 15:41:13 :INFO ] - Got 2 files for zg250

[18-02-25 15:41:13 :INFO ] - Got 2 files for zg500

[18-02-25 15:41:13 :INFO ] - Opening files for uas

[18-02-25 15:41:13 :DEBUG ] - Files: ['./data/era5/south/uas/2019.nc', './data/era5/south/uas/2020.nc']

[18-02-25 15:41:13 :DEBUG ] - eccodes lib search: trying to find binary wheel

[18-02-25 15:41:13 :DEBUG ] - eccodes lib search: looking in /data/hpcdata/users/bryald/miniconda3/envs/icenet0.2.9_dev/lib/python3.11/site-packages/eccodes.libs

[18-02-25 15:41:13 :DEBUG ] - eccodes lib search: returning wheel library from /data/hpcdata/users/bryald/miniconda3/envs/icenet0.2.9_dev/lib/python3.11/site-packages/eccodes.libs/libeccodes-35258663.so

[18-02-25 15:41:13 :DEBUG ] - eccodes lib search: versions: {'eccodes': '2.38.3'}

[18-02-25 15:41:14 :DEBUG ] - GDAL data found in package: path='/data/hpcdata/users/bryald/miniconda3/envs/icenet0.2.9_dev/lib/python3.11/site-packages/rasterio/gdal_data'.

[18-02-25 15:41:14 :DEBUG ] - PROJ data found in package: path='/data/hpcdata/users/bryald/miniconda3/envs/icenet0.2.9_dev/lib/python3.11/site-packages/rasterio/proj_data'.

[18-02-25 15:41:14 :DEBUG ] - Files have var names uas which will be renamed to uas

[18-02-25 15:41:14 :DEBUG ] - 731 dates in da

[18-02-25 15:41:14 :INFO ] - Filtered to 731 units long based on configuration requirements

[18-02-25 15:41:16 :DEBUG ] - No pre normalisation implemented for uas

[18-02-25 15:41:16 :INFO ] - Normalising uas

[18-02-25 15:41:16 :DEBUG ] - Generating norm-scaling min-max from 91 training dates

[18-02-25 15:41:17 :DEBUG ] - No post normalisation implemented for uas

[18-02-25 15:41:18 :DEBUG ] - Adding uas file: ./processed/tutorial_pipeline_south/era5/south/uas/uas_abs.nc

[18-02-25 15:41:18 :INFO ] - Opening files for vas

[18-02-25 15:41:18 :DEBUG ] - Files: ['./data/era5/south/vas/2019.nc', './data/era5/south/vas/2020.nc']

[18-02-25 15:41:18 :DEBUG ] - Files have var names vas which will be renamed to vas

[18-02-25 15:41:18 :DEBUG ] - 731 dates in da

[18-02-25 15:41:18 :INFO ] - Filtered to 731 units long based on configuration requirements

[18-02-25 15:41:20 :DEBUG ] - No pre normalisation implemented for vas

[18-02-25 15:41:20 :INFO ] - Normalising vas

[18-02-25 15:41:20 :DEBUG ] - Generating norm-scaling min-max from 91 training dates

[18-02-25 15:41:21 :DEBUG ] - No post normalisation implemented for vas

[18-02-25 15:41:21 :DEBUG ] - Adding vas file: ./processed/tutorial_pipeline_south/era5/south/vas/vas_abs.nc

[18-02-25 15:41:21 :INFO ] - Opening files for zg500

[18-02-25 15:41:21 :DEBUG ] - Files: ['./data/era5/south/zg500/2019.nc', './data/era5/south/zg500/2020.nc']

[18-02-25 15:41:21 :DEBUG ] - Files have var names zg500 which will be renamed to zg500

[18-02-25 15:41:21 :DEBUG ] - 731 dates in da

[18-02-25 15:41:21 :INFO ] - Filtered to 731 units long based on configuration requirements

[18-02-25 15:41:21 :INFO ] - Generating climatology ./processed/tutorial_pipeline_south/era5/south/params/climatology.zg500

[18-02-25 15:41:22 :WARNING ] - We don't have a full climatology (1,2,3) compared with data (1,2,3,4,5,6,7,8,9,10,11,12)

[18-02-25 15:41:23 :DEBUG ] - No pre normalisation implemented for zg500

[18-02-25 15:41:23 :INFO ] - Normalising zg500

[18-02-25 15:41:23 :DEBUG ] - Generating norm-scaling min-max from 91 training dates

[18-02-25 15:41:24 :DEBUG ] - No post normalisation implemented for zg500

[18-02-25 15:41:24 :DEBUG ] - Adding zg500 file: ./processed/tutorial_pipeline_south/era5/south/zg500/zg500_anom.nc

[18-02-25 15:41:24 :INFO ] - Opening files for zg250

[18-02-25 15:41:24 :DEBUG ] - Files: ['./data/era5/south/zg250/2019.nc', './data/era5/south/zg250/2020.nc']

[18-02-25 15:41:24 :DEBUG ] - Files have var names zg250 which will be renamed to zg250

[18-02-25 15:41:24 :DEBUG ] - 731 dates in da

[18-02-25 15:41:24 :INFO ] - Filtered to 731 units long based on configuration requirements

[18-02-25 15:41:24 :INFO ] - Generating climatology ./processed/tutorial_pipeline_south/era5/south/params/climatology.zg250

[18-02-25 15:41:25 :WARNING ] - We don't have a full climatology (1,2,3) compared with data (1,2,3,4,5,6,7,8,9,10,11,12)

[18-02-25 15:41:26 :DEBUG ] - No pre normalisation implemented for zg250

[18-02-25 15:41:26 :INFO ] - Normalising zg250

[18-02-25 15:41:26 :DEBUG ] - Generating norm-scaling min-max from 91 training dates

[18-02-25 15:41:27 :DEBUG ] - No post normalisation implemented for zg250

[18-02-25 15:41:28 :DEBUG ] - Adding zg250 file: ./processed/tutorial_pipeline_south/era5/south/zg250/zg250_anom.nc

[18-02-25 15:41:28 :INFO ] - Writing configuration to ./loader.tutorial_pipeline_south.json

[18-02-25 15:41:32 :INFO ] - Got 91 dates for train

[18-02-25 15:41:32 :INFO ] - Got 21 dates for val

[18-02-25 15:41:32 :INFO ] - Got 2 dates for test

[18-02-25 15:41:32 :INFO ] - Creating path: ./processed/tutorial_pipeline_south/osisaf

[18-02-25 15:41:32 :DEBUG ] - Setting range for linear trend steps based on 7

[18-02-25 15:41:32 :INFO ] - Processing 91 dates for train category

[18-02-25 15:41:32 :INFO ] - Including lag of 1 days

[18-02-25 15:41:32 :INFO ] - Including lead of 93 days

[18-02-25 15:41:32 :DEBUG ] - Globbing train from ./data/osisaf/south/**/[12]*.nc

[18-02-25 15:41:32 :DEBUG ] - Globbed 1 files

[18-02-25 15:41:32 :DEBUG ] - Create structure of 1 files

[18-02-25 15:41:32 :INFO ] - No data found for 2019-12-31, outside data boundary perhaps?

[18-02-25 15:41:32 :INFO ] - Processing 21 dates for val category

[18-02-25 15:41:32 :INFO ] - Including lag of 1 days

[18-02-25 15:41:32 :INFO ] - Including lead of 93 days

[18-02-25 15:41:32 :DEBUG ] - Globbing val from ./data/osisaf/south/**/[12]*.nc

[18-02-25 15:41:32 :DEBUG ] - Globbed 1 files

[18-02-25 15:41:32 :DEBUG ] - Create structure of 1 files

[18-02-25 15:41:32 :INFO ] - Processing 2 dates for test category

[18-02-25 15:41:32 :INFO ] - Including lag of 1 days

[18-02-25 15:41:32 :INFO ] - Including lead of 93 days

[18-02-25 15:41:32 :DEBUG ] - Globbing test from ./data/osisaf/south/**/[12]*.nc

[18-02-25 15:41:32 :DEBUG ] - Globbed 1 files

[18-02-25 15:41:32 :DEBUG ] - Create structure of 1 files

[18-02-25 15:41:32 :INFO ] - Got 1 files for siconca

[18-02-25 15:41:32 :INFO ] - Opening files for siconca

[18-02-25 15:41:32 :DEBUG ] - Files: ['./data/osisaf/south/siconca/2020.nc']

[18-02-25 15:41:32 :DEBUG ] - eccodes lib search: trying to find binary wheel

[18-02-25 15:41:32 :DEBUG ] - eccodes lib search: looking in /data/hpcdata/users/bryald/miniconda3/envs/icenet0.2.9_dev/lib/python3.11/site-packages/eccodes.libs

[18-02-25 15:41:32 :DEBUG ] - eccodes lib search: returning wheel library from /data/hpcdata/users/bryald/miniconda3/envs/icenet0.2.9_dev/lib/python3.11/site-packages/eccodes.libs/libeccodes-35258663.so

[18-02-25 15:41:32 :DEBUG ] - eccodes lib search: versions: {'eccodes': '2.38.3'}

[18-02-25 15:41:32 :DEBUG ] - GDAL data found in package: path='/data/hpcdata/users/bryald/miniconda3/envs/icenet0.2.9_dev/lib/python3.11/site-packages/rasterio/gdal_data'.

[18-02-25 15:41:32 :DEBUG ] - PROJ data found in package: path='/data/hpcdata/users/bryald/miniconda3/envs/icenet0.2.9_dev/lib/python3.11/site-packages/rasterio/proj_data'.

[18-02-25 15:41:33 :DEBUG ] - Files have var names ice_conc which will be renamed to siconca

[18-02-25 15:41:33 :DEBUG ] - 121 dates in da

[18-02-25 15:41:33 :INFO ] - Filtered to 121 units long based on configuration requirements

[18-02-25 15:41:33 :DEBUG ] - ./data/masks already exists

[18-02-25 15:41:33 :INFO ] - Generating trend data up to 7 steps ahead for 121 dates

[18-02-25 15:41:33 :INFO ] - Generating 127 trend dates

[18-02-25 15:41:34 :DEBUG ] - ./data/masks already exists

[18-02-25 15:41:38 :INFO ] - Writing new trend cache for siconca

[18-02-25 15:41:38 :DEBUG ] - Adding siconca file: ./processed/tutorial_pipeline_south/osisaf/south/siconca/siconca_linear_trend.nc

[18-02-25 15:41:38 :INFO ] - No normalisation for siconca

[18-02-25 15:41:38 :DEBUG ] - No post normalisation implemented for siconca

[18-02-25 15:41:38 :DEBUG ] - Adding siconca file: ./processed/tutorial_pipeline_south/osisaf/south/siconca/siconca_abs.nc

[18-02-25 15:41:38 :INFO ] - Loading configuration ./loader.tutorial_pipeline_south.json

[18-02-25 15:41:38 :INFO ] - Writing configuration to ./loader.tutorial_pipeline_south.json

[18-02-25 15:41:41 :INFO ] - Creating path: ./processed/tutorial_pipeline_south/meta

[18-02-25 15:41:42 :INFO ] - Loading configuration ./loader.tutorial_pipeline_south.json

[18-02-25 15:41:42 :INFO ] - Writing configuration to ./loader.tutorial_pipeline_south.json

[18-02-25 15:41:45 :INFO ] - Got 0 dates for train

[18-02-25 15:41:45 :INFO ] - Got 0 dates for val

[18-02-25 15:41:45 :INFO ] - Got 0 dates for test

[18-02-25 15:41:45 :INFO ] - Creating path: ./network_datasets/tutorial_pipeline_south

[18-02-25 15:41:45 :INFO ] - Loading configuration loader.tutorial_pipeline_south.json

[18-02-25 15:41:45 :DEBUG ] - Adding 1 to uas_abs channel

[18-02-25 15:41:45 :DEBUG ] - Adding 1 to vas_abs channel

[18-02-25 15:41:45 :DEBUG ] - Adding 1 to siconca_abs channel

[18-02-25 15:41:45 :DEBUG ] - Adding 1 to zg250_anom channel

[18-02-25 15:41:45 :DEBUG ] - Adding 1 to zg500_anom channel

[18-02-25 15:41:45 :DEBUG ] - Adding 1 to siconca_linear_trend channel

[18-02-25 15:41:45 :DEBUG ] - Adding 1 to cos channel

[18-02-25 15:41:45 :DEBUG ] - Adding 1 to land channel

[18-02-25 15:41:45 :DEBUG ] - Adding 1 to sin channel

[18-02-25 15:41:45 :DEBUG ] - Channel quantities deduced:

{'cos': 1,

'land': 1,

'siconca_abs': 1,

'siconca_linear_trend': 7,

'sin': 1,

'uas_abs': 1,

'vas_abs': 1,

'zg250_anom': 1,

'zg500_anom': 1}

Total channels: 15

[18-02-25 15:41:45 :DEBUG ] - ./data/masks already exists

[18-02-25 15:41:45 :DEBUG ] - Using selector: EpollSelector

/data/hpcdata/users/bryald/miniconda3/envs/icenet0.2.9_dev/lib/python3.11/site-packages/distributed/node.py:187: UserWarning: Port 8888 is already in use.

Perhaps you already have a cluster running?

Hosting the HTTP server on port 44768 instead

warnings.warn(

[18-02-25 15:41:46 :INFO ] - Dashboard at localhost:8888

[18-02-25 15:41:46 :INFO ] - Using dask client <Client: 'tcp://127.0.0.1:45212' processes=8 threads=8, memory=503.20 GiB>

[18-02-25 15:41:47 :INFO ] - 91 train dates to process, generating cache data.

2025-02-18 15:41:50,850 - distributed.scheduler - WARNING - Detected different `run_spec` for key ('open_dataset-siconca_abs-91ef2c2db1f71f7aeb21e08939b695ac', 13, 0, 0) between two consecutive calls to `update_graph`. This can cause failures and deadlocks down the line. Please ensure unique key names. If you are using a standard dask collections, consider releasing all the data before resubmitting another computation. More details and help can be found at https://github.com/dask/dask/issues/9888.

Debugging information

---------------------

old task state: released

old run_spec: <Task ('open_dataset-siconca_abs-91ef2c2db1f71f7aeb21e08939b695ac', 13, 0, 0) getter(...)>

new run_spec: Alias(('open_dataset-siconca_abs-91ef2c2db1f71f7aeb21e08939b695ac', 13, 0, 0)->('original-open_dataset-siconca_abs-91ef2c2db1f71f7aeb21e08939b695ac', 13, 0, 0))

old dependencies: {'original-open_dataset-siconca_abs-91ef2c2db1f71f7aeb21e08939b695ac'}

new dependencies: {('original-open_dataset-siconca_abs-91ef2c2db1f71f7aeb21e08939b695ac', 13, 0, 0)}

/data/hpcdata/users/bryald/miniconda3/envs/icenet0.2.9_dev/lib/python3.11/site-packages/distributed/client.py:3370: UserWarning: Sending large graph of size 22.10 MiB.

This may cause some slowdown.

Consider loading the data with Dask directly

or using futures or delayed objects to embed the data into the graph without repetition.

See also https://docs.dask.org/en/stable/best-practices.html#load-data-with-dask for more information.

warnings.warn(

/data/hpcdata/users/bryald/miniconda3/envs/icenet0.2.9_dev/lib/python3.11/site-packages/distributed/client.py:3370: UserWarning: Sending large graph of size 22.10 MiB.

This may cause some slowdown.

Consider loading the data with Dask directly

or using futures or delayed objects to embed the data into the graph without repetition.

See also https://docs.dask.org/en/stable/best-practices.html#load-data-with-dask for more information.

warnings.warn(

/data/hpcdata/users/bryald/miniconda3/envs/icenet0.2.9_dev/lib/python3.11/site-packages/distributed/client.py:3370: UserWarning: Sending large graph of size 22.10 MiB.

This may cause some slowdown.

Consider loading the data with Dask directly

or using futures or delayed objects to embed the data into the graph without repetition.

See also https://docs.dask.org/en/stable/best-practices.html#load-data-with-dask for more information.

warnings.warn(

/data/hpcdata/users/bryald/miniconda3/envs/icenet0.2.9_dev/lib/python3.11/site-packages/distributed/client.py:3370: UserWarning: Sending large graph of size 22.10 MiB.

This may cause some slowdown.

Consider loading the data with Dask directly

or using futures or delayed objects to embed the data into the graph without repetition.

See also https://docs.dask.org/en/stable/best-practices.html#load-data-with-dask for more information.

warnings.warn(

/data/hpcdata/users/bryald/miniconda3/envs/icenet0.2.9_dev/lib/python3.11/site-packages/distributed/client.py:3370: UserWarning: Sending large graph of size 22.10 MiB.

This may cause some slowdown.

Consider loading the data with Dask directly

or using futures or delayed objects to embed the data into the graph without repetition.

See also https://docs.dask.org/en/stable/best-practices.html#load-data-with-dask for more information.

warnings.warn(

/data/hpcdata/users/bryald/miniconda3/envs/icenet0.2.9_dev/lib/python3.11/site-packages/distributed/client.py:3370: UserWarning: Sending large graph of size 22.10 MiB.

This may cause some slowdown.

Consider loading the data with Dask directly

or using futures or delayed objects to embed the data into the graph without repetition.

See also https://docs.dask.org/en/stable/best-practices.html#load-data-with-dask for more information.

warnings.warn(

/data/hpcdata/users/bryald/miniconda3/envs/icenet0.2.9_dev/lib/python3.11/site-packages/distributed/client.py:3370: UserWarning: Sending large graph of size 22.10 MiB.

This may cause some slowdown.

Consider loading the data with Dask directly

or using futures or delayed objects to embed the data into the graph without repetition.

See also https://docs.dask.org/en/stable/best-practices.html#load-data-with-dask for more information.

warnings.warn(

/data/hpcdata/users/bryald/miniconda3/envs/icenet0.2.9_dev/lib/python3.11/site-packages/distributed/client.py:3370: UserWarning: Sending large graph of size 22.10 MiB.

This may cause some slowdown.

Consider loading the data with Dask directly

or using futures or delayed objects to embed the data into the graph without repetition.

See also https://docs.dask.org/en/stable/best-practices.html#load-data-with-dask for more information.

warnings.warn(

/data/hpcdata/users/bryald/miniconda3/envs/icenet0.2.9_dev/lib/python3.11/site-packages/distributed/client.py:3370: UserWarning: Sending large graph of size 22.10 MiB.

This may cause some slowdown.

Consider loading the data with Dask directly

or using futures or delayed objects to embed the data into the graph without repetition.

See also https://docs.dask.org/en/stable/best-practices.html#load-data-with-dask for more information.

warnings.warn(

/data/hpcdata/users/bryald/miniconda3/envs/icenet0.2.9_dev/lib/python3.11/site-packages/distributed/client.py:3370: UserWarning: Sending large graph of size 22.10 MiB.

This may cause some slowdown.

Consider loading the data with Dask directly

or using futures or delayed objects to embed the data into the graph without repetition.

See also https://docs.dask.org/en/stable/best-practices.html#load-data-with-dask for more information.

warnings.warn(

/data/hpcdata/users/bryald/miniconda3/envs/icenet0.2.9_dev/lib/python3.11/site-packages/distributed/client.py:3370: UserWarning: Sending large graph of size 22.10 MiB.

This may cause some slowdown.

Consider loading the data with Dask directly

or using futures or delayed objects to embed the data into the graph without repetition.

See also https://docs.dask.org/en/stable/best-practices.html#load-data-with-dask for more information.

warnings.warn(

/data/hpcdata/users/bryald/miniconda3/envs/icenet0.2.9_dev/lib/python3.11/site-packages/distributed/client.py:3370: UserWarning: Sending large graph of size 22.10 MiB.

This may cause some slowdown.

Consider loading the data with Dask directly

or using futures or delayed objects to embed the data into the graph without repetition.

See also https://docs.dask.org/en/stable/best-practices.html#load-data-with-dask for more information.

warnings.warn(

/data/hpcdata/users/bryald/miniconda3/envs/icenet0.2.9_dev/lib/python3.11/site-packages/distributed/client.py:3370: UserWarning: Sending large graph of size 22.10 MiB.

This may cause some slowdown.

Consider loading the data with Dask directly

or using futures or delayed objects to embed the data into the graph without repetition.

See also https://docs.dask.org/en/stable/best-practices.html#load-data-with-dask for more information.

warnings.warn(

/data/hpcdata/users/bryald/miniconda3/envs/icenet0.2.9_dev/lib/python3.11/site-packages/distributed/client.py:3370: UserWarning: Sending large graph of size 22.10 MiB.

This may cause some slowdown.

Consider loading the data with Dask directly

or using futures or delayed objects to embed the data into the graph without repetition.

See also https://docs.dask.org/en/stable/best-practices.html#load-data-with-dask for more information.

warnings.warn(

/data/hpcdata/users/bryald/miniconda3/envs/icenet0.2.9_dev/lib/python3.11/site-packages/distributed/client.py:3370: UserWarning: Sending large graph of size 22.10 MiB.

This may cause some slowdown.

Consider loading the data with Dask directly

or using futures or delayed objects to embed the data into the graph without repetition.

See also https://docs.dask.org/en/stable/best-practices.html#load-data-with-dask for more information.

warnings.warn(

/data/hpcdata/users/bryald/miniconda3/envs/icenet0.2.9_dev/lib/python3.11/site-packages/distributed/client.py:3370: UserWarning: Sending large graph of size 22.10 MiB.

This may cause some slowdown.

Consider loading the data with Dask directly

or using futures or delayed objects to embed the data into the graph without repetition.

See also https://docs.dask.org/en/stable/best-practices.html#load-data-with-dask for more information.

warnings.warn(

2025-02-18 15:41:57,926 - distributed.scheduler - WARNING - Detected different `run_spec` for key ('open_dataset-siconca_abs-91ef2c2db1f71f7aeb21e08939b695ac', 12, 0, 0) between two consecutive calls to `update_graph`. This can cause failures and deadlocks down the line. Please ensure unique key names. If you are using a standard dask collections, consider releasing all the data before resubmitting another computation. More details and help can be found at https://github.com/dask/dask/issues/9888.

Debugging information

---------------------

old task state: released

old run_spec: <Task ('open_dataset-siconca_abs-91ef2c2db1f71f7aeb21e08939b695ac', 12, 0, 0) getter(...)>

new run_spec: Alias(('open_dataset-siconca_abs-91ef2c2db1f71f7aeb21e08939b695ac', 12, 0, 0)->('original-open_dataset-siconca_abs-91ef2c2db1f71f7aeb21e08939b695ac', 12, 0, 0))

old dependencies: {'original-open_dataset-siconca_abs-91ef2c2db1f71f7aeb21e08939b695ac'}

new dependencies: {('original-open_dataset-siconca_abs-91ef2c2db1f71f7aeb21e08939b695ac', 12, 0, 0)}

/data/hpcdata/users/bryald/miniconda3/envs/icenet0.2.9_dev/lib/python3.11/site-packages/distributed/client.py:3370: UserWarning: Sending large graph of size 22.10 MiB.

This may cause some slowdown.

Consider loading the data with Dask directly

or using futures or delayed objects to embed the data into the graph without repetition.

See also https://docs.dask.org/en/stable/best-practices.html#load-data-with-dask for more information.

warnings.warn(

2025-02-18 15:41:59,423 - distributed.scheduler - WARNING - Detected different `run_spec` for key ('open_dataset-siconca_abs-91ef2c2db1f71f7aeb21e08939b695ac', 22, 0, 0) between two consecutive calls to `update_graph`. This can cause failures and deadlocks down the line. Please ensure unique key names. If you are using a standard dask collections, consider releasing all the data before resubmitting another computation. More details and help can be found at https://github.com/dask/dask/issues/9888.

Debugging information

---------------------

old task state: released

old run_spec: <Task ('open_dataset-siconca_abs-91ef2c2db1f71f7aeb21e08939b695ac', 22, 0, 0) getter(...)>

new run_spec: Alias(('open_dataset-siconca_abs-91ef2c2db1f71f7aeb21e08939b695ac', 22, 0, 0)->('original-open_dataset-siconca_abs-91ef2c2db1f71f7aeb21e08939b695ac', 22, 0, 0))

old dependencies: {'original-open_dataset-siconca_abs-91ef2c2db1f71f7aeb21e08939b695ac'}

new dependencies: {('original-open_dataset-siconca_abs-91ef2c2db1f71f7aeb21e08939b695ac', 22, 0, 0)}

2025-02-18 15:42:00,057 - distributed.scheduler - WARNING - Detected different `run_spec` for key ('open_dataset-siconca_abs-91ef2c2db1f71f7aeb21e08939b695ac', 4, 0, 0) between two consecutive calls to `update_graph`. This can cause failures and deadlocks down the line. Please ensure unique key names. If you are using a standard dask collections, consider releasing all the data before resubmitting another computation. More details and help can be found at https://github.com/dask/dask/issues/9888.

Debugging information

---------------------

old task state: released

old run_spec: <Task ('open_dataset-siconca_abs-91ef2c2db1f71f7aeb21e08939b695ac', 4, 0, 0) getter(...)>

new run_spec: Alias(('open_dataset-siconca_abs-91ef2c2db1f71f7aeb21e08939b695ac', 4, 0, 0)->('original-open_dataset-siconca_abs-91ef2c2db1f71f7aeb21e08939b695ac', 4, 0, 0))

old dependencies: {'original-open_dataset-siconca_abs-91ef2c2db1f71f7aeb21e08939b695ac'}

new dependencies: {('original-open_dataset-siconca_abs-91ef2c2db1f71f7aeb21e08939b695ac', 4, 0, 0)}

/data/hpcdata/users/bryald/miniconda3/envs/icenet0.2.9_dev/lib/python3.11/site-packages/distributed/client.py:3370: UserWarning: Sending large graph of size 22.10 MiB.

This may cause some slowdown.

Consider loading the data with Dask directly

or using futures or delayed objects to embed the data into the graph without repetition.

See also https://docs.dask.org/en/stable/best-practices.html#load-data-with-dask for more information.

warnings.warn(

2025-02-18 15:42:00,340 - distributed.scheduler - WARNING - Detected different `run_spec` for key ('astype-ac07afc5678ef766d38010ceed63954d', 0, 0) between two consecutive calls to `update_graph`. This can cause failures and deadlocks down the line. Please ensure unique key names. If you are using a standard dask collections, consider releasing all the data before resubmitting another computation. More details and help can be found at https://github.com/dask/dask/issues/9888.

Debugging information

---------------------

old task state: released

old run_spec: Alias(('astype-ac07afc5678ef766d38010ceed63954d', 0, 0)->('array-getitem-astype-ac07afc5678ef766d38010ceed63954d', 0, 0))

new run_spec: Alias(('astype-ac07afc5678ef766d38010ceed63954d', 0, 0)->('getitem-astype-ac07afc5678ef766d38010ceed63954d', 0, 0))

old dependencies: {('array-getitem-astype-ac07afc5678ef766d38010ceed63954d', 0, 0)}

new dependencies: {('getitem-astype-ac07afc5678ef766d38010ceed63954d', 0, 0)}

/data/hpcdata/users/bryald/miniconda3/envs/icenet0.2.9_dev/lib/python3.11/site-packages/distributed/client.py:3370: UserWarning: Sending large graph of size 22.10 MiB.

This may cause some slowdown.

Consider loading the data with Dask directly

or using futures or delayed objects to embed the data into the graph without repetition.

See also https://docs.dask.org/en/stable/best-practices.html#load-data-with-dask for more information.

warnings.warn(

/data/hpcdata/users/bryald/miniconda3/envs/icenet0.2.9_dev/lib/python3.11/site-packages/distributed/client.py:3370: UserWarning: Sending large graph of size 22.10 MiB.

This may cause some slowdown.

Consider loading the data with Dask directly

or using futures or delayed objects to embed the data into the graph without repetition.

See also https://docs.dask.org/en/stable/best-practices.html#load-data-with-dask for more information.

warnings.warn(

/data/hpcdata/users/bryald/miniconda3/envs/icenet0.2.9_dev/lib/python3.11/site-packages/distributed/client.py:3370: UserWarning: Sending large graph of size 22.10 MiB.

This may cause some slowdown.

Consider loading the data with Dask directly

or using futures or delayed objects to embed the data into the graph without repetition.

See also https://docs.dask.org/en/stable/best-practices.html#load-data-with-dask for more information.

warnings.warn(

2025-02-18 15:42:01,119 - distributed.scheduler - WARNING - Detected different `run_spec` for key ('open_dataset-siconca_abs-91ef2c2db1f71f7aeb21e08939b695ac', 16, 0, 0) between two consecutive calls to `update_graph`. This can cause failures and deadlocks down the line. Please ensure unique key names. If you are using a standard dask collections, consider releasing all the data before resubmitting another computation. More details and help can be found at https://github.com/dask/dask/issues/9888.

Debugging information

---------------------

old task state: released

old run_spec: <Task ('open_dataset-siconca_abs-91ef2c2db1f71f7aeb21e08939b695ac', 16, 0, 0) getter(...)>

new run_spec: Alias(('open_dataset-siconca_abs-91ef2c2db1f71f7aeb21e08939b695ac', 16, 0, 0)->('original-open_dataset-siconca_abs-91ef2c2db1f71f7aeb21e08939b695ac', 16, 0, 0))

old dependencies: {'original-open_dataset-siconca_abs-91ef2c2db1f71f7aeb21e08939b695ac'}

new dependencies: {('original-open_dataset-siconca_abs-91ef2c2db1f71f7aeb21e08939b695ac', 16, 0, 0)}

2025-02-18 15:42:01,207 - distributed.scheduler - WARNING - Detected different `run_spec` for key ('open_dataset-siconca_abs-91ef2c2db1f71f7aeb21e08939b695ac', 18, 0, 0) between two consecutive calls to `update_graph`. This can cause failures and deadlocks down the line. Please ensure unique key names. If you are using a standard dask collections, consider releasing all the data before resubmitting another computation. More details and help can be found at https://github.com/dask/dask/issues/9888.

Debugging information

---------------------

old task state: released

old run_spec: <Task ('open_dataset-siconca_abs-91ef2c2db1f71f7aeb21e08939b695ac', 18, 0, 0) getter(...)>

new run_spec: Alias(('open_dataset-siconca_abs-91ef2c2db1f71f7aeb21e08939b695ac', 18, 0, 0)->('original-open_dataset-siconca_abs-91ef2c2db1f71f7aeb21e08939b695ac', 18, 0, 0))

old dependencies: {'original-open_dataset-siconca_abs-91ef2c2db1f71f7aeb21e08939b695ac'}

new dependencies: {('original-open_dataset-siconca_abs-91ef2c2db1f71f7aeb21e08939b695ac', 18, 0, 0)}

/data/hpcdata/users/bryald/miniconda3/envs/icenet0.2.9_dev/lib/python3.11/site-packages/distributed/client.py:3370: UserWarning: Sending large graph of size 22.10 MiB.

This may cause some slowdown.

Consider loading the data with Dask directly

or using futures or delayed objects to embed the data into the graph without repetition.

See also https://docs.dask.org/en/stable/best-practices.html#load-data-with-dask for more information.

warnings.warn(

/data/hpcdata/users/bryald/miniconda3/envs/icenet0.2.9_dev/lib/python3.11/site-packages/distributed/client.py:3370: UserWarning: Sending large graph of size 22.10 MiB.

This may cause some slowdown.

Consider loading the data with Dask directly

or using futures or delayed objects to embed the data into the graph without repetition.

See also https://docs.dask.org/en/stable/best-practices.html#load-data-with-dask for more information.

warnings.warn(

/data/hpcdata/users/bryald/miniconda3/envs/icenet0.2.9_dev/lib/python3.11/site-packages/distributed/client.py:3370: UserWarning: Sending large graph of size 22.10 MiB.

This may cause some slowdown.

Consider loading the data with Dask directly

or using futures or delayed objects to embed the data into the graph without repetition.

See also https://docs.dask.org/en/stable/best-practices.html#load-data-with-dask for more information.

warnings.warn(

/data/hpcdata/users/bryald/miniconda3/envs/icenet0.2.9_dev/lib/python3.11/site-packages/distributed/client.py:3370: UserWarning: Sending large graph of size 22.10 MiB.

This may cause some slowdown.

Consider loading the data with Dask directly

or using futures or delayed objects to embed the data into the graph without repetition.

See also https://docs.dask.org/en/stable/best-practices.html#load-data-with-dask for more information.

warnings.warn(

/data/hpcdata/users/bryald/miniconda3/envs/icenet0.2.9_dev/lib/python3.11/site-packages/distributed/client.py:3370: UserWarning: Sending large graph of size 22.10 MiB.

This may cause some slowdown.

Consider loading the data with Dask directly

or using futures or delayed objects to embed the data into the graph without repetition.

See also https://docs.dask.org/en/stable/best-practices.html#load-data-with-dask for more information.

warnings.warn(

/data/hpcdata/users/bryald/miniconda3/envs/icenet0.2.9_dev/lib/python3.11/site-packages/distributed/client.py:3370: UserWarning: Sending large graph of size 22.10 MiB.

This may cause some slowdown.

Consider loading the data with Dask directly

or using futures or delayed objects to embed the data into the graph without repetition.

See also https://docs.dask.org/en/stable/best-practices.html#load-data-with-dask for more information.

warnings.warn(

/data/hpcdata/users/bryald/miniconda3/envs/icenet0.2.9_dev/lib/python3.11/site-packages/distributed/client.py:3370: UserWarning: Sending large graph of size 22.10 MiB.

This may cause some slowdown.

Consider loading the data with Dask directly

or using futures or delayed objects to embed the data into the graph without repetition.

See also https://docs.dask.org/en/stable/best-practices.html#load-data-with-dask for more information.

warnings.warn(

/data/hpcdata/users/bryald/miniconda3/envs/icenet0.2.9_dev/lib/python3.11/site-packages/distributed/client.py:3370: UserWarning: Sending large graph of size 22.10 MiB.

This may cause some slowdown.

Consider loading the data with Dask directly

or using futures or delayed objects to embed the data into the graph without repetition.

See also https://docs.dask.org/en/stable/best-practices.html#load-data-with-dask for more information.

warnings.warn(

/data/hpcdata/users/bryald/miniconda3/envs/icenet0.2.9_dev/lib/python3.11/site-packages/distributed/client.py:3370: UserWarning: Sending large graph of size 22.10 MiB.

This may cause some slowdown.

Consider loading the data with Dask directly

or using futures or delayed objects to embed the data into the graph without repetition.

See also https://docs.dask.org/en/stable/best-practices.html#load-data-with-dask for more information.

warnings.warn(

/data/hpcdata/users/bryald/miniconda3/envs/icenet0.2.9_dev/lib/python3.11/site-packages/distributed/client.py:3370: UserWarning: Sending large graph of size 22.10 MiB.

This may cause some slowdown.

Consider loading the data with Dask directly

or using futures or delayed objects to embed the data into the graph without repetition.

See also https://docs.dask.org/en/stable/best-practices.html#load-data-with-dask for more information.

warnings.warn(

/data/hpcdata/users/bryald/miniconda3/envs/icenet0.2.9_dev/lib/python3.11/site-packages/distributed/client.py:3370: UserWarning: Sending large graph of size 22.10 MiB.

This may cause some slowdown.

Consider loading the data with Dask directly

or using futures or delayed objects to embed the data into the graph without repetition.

See also https://docs.dask.org/en/stable/best-practices.html#load-data-with-dask for more information.

warnings.warn(

[18-02-25 15:42:10 :INFO ] - Finished output ./network_datasets/tutorial_pipeline_south/south/train/00000000.tfrecord

[18-02-25 15:42:10 :INFO ] - Finished output ./network_datasets/tutorial_pipeline_south/south/train/00000001.tfrecord

[18-02-25 15:42:10 :INFO ] - Finished output ./network_datasets/tutorial_pipeline_south/south/train/00000002.tfrecord

[18-02-25 15:42:10 :INFO ] - Finished output ./network_datasets/tutorial_pipeline_south/south/train/00000003.tfrecord

[18-02-25 15:42:10 :INFO ] - Finished output ./network_datasets/tutorial_pipeline_south/south/train/00000004.tfrecord

[18-02-25 15:42:10 :INFO ] - Finished output ./network_datasets/tutorial_pipeline_south/south/train/00000005.tfrecord

[18-02-25 15:42:10 :INFO ] - Finished output ./network_datasets/tutorial_pipeline_south/south/train/00000006.tfrecord

[18-02-25 15:42:10 :INFO ] - Finished output ./network_datasets/tutorial_pipeline_south/south/train/00000007.tfrecord

[18-02-25 15:42:10 :INFO ] - Finished output ./network_datasets/tutorial_pipeline_south/south/train/00000008.tfrecord

[18-02-25 15:42:10 :INFO ] - Finished output ./network_datasets/tutorial_pipeline_south/south/train/00000009.tfrecord

[18-02-25 15:42:10 :INFO ] - Finished output ./network_datasets/tutorial_pipeline_south/south/train/00000010.tfrecord

[18-02-25 15:42:10 :INFO ] - Finished output ./network_datasets/tutorial_pipeline_south/south/train/00000011.tfrecord

[18-02-25 15:42:10 :INFO ] - Finished output ./network_datasets/tutorial_pipeline_south/south/train/00000012.tfrecord

[18-02-25 15:42:10 :INFO ] - Finished output ./network_datasets/tutorial_pipeline_south/south/train/00000013.tfrecord

[18-02-25 15:42:10 :INFO ] - Finished output ./network_datasets/tutorial_pipeline_south/south/train/00000014.tfrecord

[18-02-25 15:42:10 :INFO ] - Finished output ./network_datasets/tutorial_pipeline_south/south/train/00000015.tfrecord

2025-02-18 15:42:11,735 - distributed.scheduler - WARNING - Detected different `run_spec` for key ('open_dataset-siconca_abs-91ef2c2db1f71f7aeb21e08939b695ac', 39, 0, 0) between two consecutive calls to `update_graph`. This can cause failures and deadlocks down the line. Please ensure unique key names. If you are using a standard dask collections, consider releasing all the data before resubmitting another computation. More details and help can be found at https://github.com/dask/dask/issues/9888.

Debugging information

---------------------

old task state: released

old run_spec: <Task ('open_dataset-siconca_abs-91ef2c2db1f71f7aeb21e08939b695ac', 39, 0, 0) getter(...)>

new run_spec: Alias(('open_dataset-siconca_abs-91ef2c2db1f71f7aeb21e08939b695ac', 39, 0, 0)->('original-open_dataset-siconca_abs-91ef2c2db1f71f7aeb21e08939b695ac', 39, 0, 0))

old dependencies: {'original-open_dataset-siconca_abs-91ef2c2db1f71f7aeb21e08939b695ac'}

new dependencies: {('original-open_dataset-siconca_abs-91ef2c2db1f71f7aeb21e08939b695ac', 39, 0, 0)}

2025-02-18 15:42:11,774 - distributed.scheduler - WARNING - Detected different `run_spec` for key ('open_dataset-siconca_abs-91ef2c2db1f71f7aeb21e08939b695ac', 35, 0, 0) between two consecutive calls to `update_graph`. This can cause failures and deadlocks down the line. Please ensure unique key names. If you are using a standard dask collections, consider releasing all the data before resubmitting another computation. More details and help can be found at https://github.com/dask/dask/issues/9888.

Debugging information

---------------------

old task state: released

old run_spec: <Task ('open_dataset-siconca_abs-91ef2c2db1f71f7aeb21e08939b695ac', 35, 0, 0) getter(...)>

new run_spec: Alias(('open_dataset-siconca_abs-91ef2c2db1f71f7aeb21e08939b695ac', 35, 0, 0)->('original-open_dataset-siconca_abs-91ef2c2db1f71f7aeb21e08939b695ac', 35, 0, 0))

old dependencies: {'original-open_dataset-siconca_abs-91ef2c2db1f71f7aeb21e08939b695ac'}

new dependencies: {('original-open_dataset-siconca_abs-91ef2c2db1f71f7aeb21e08939b695ac', 35, 0, 0)}

2025-02-18 15:42:11,958 - distributed.scheduler - WARNING - Detected different `run_spec` for key ('open_dataset-siconca_abs-91ef2c2db1f71f7aeb21e08939b695ac', 53, 0, 0) between two consecutive calls to `update_graph`. This can cause failures and deadlocks down the line. Please ensure unique key names. If you are using a standard dask collections, consider releasing all the data before resubmitting another computation. More details and help can be found at https://github.com/dask/dask/issues/9888.

Debugging information

---------------------

old task state: released

old run_spec: <Task ('open_dataset-siconca_abs-91ef2c2db1f71f7aeb21e08939b695ac', 53, 0, 0) getter(...)>

new run_spec: Alias(('open_dataset-siconca_abs-91ef2c2db1f71f7aeb21e08939b695ac', 53, 0, 0)->('original-open_dataset-siconca_abs-91ef2c2db1f71f7aeb21e08939b695ac', 53, 0, 0))

old dependencies: {'original-open_dataset-siconca_abs-91ef2c2db1f71f7aeb21e08939b695ac'}

new dependencies: {('original-open_dataset-siconca_abs-91ef2c2db1f71f7aeb21e08939b695ac', 53, 0, 0)}

/data/hpcdata/users/bryald/miniconda3/envs/icenet0.2.9_dev/lib/python3.11/site-packages/distributed/client.py:3370: UserWarning: Sending large graph of size 22.10 MiB.

This may cause some slowdown.

Consider loading the data with Dask directly

or using futures or delayed objects to embed the data into the graph without repetition.

See also https://docs.dask.org/en/stable/best-practices.html#load-data-with-dask for more information.

warnings.warn(

/data/hpcdata/users/bryald/miniconda3/envs/icenet0.2.9_dev/lib/python3.11/site-packages/distributed/client.py:3370: UserWarning: Sending large graph of size 22.10 MiB.

This may cause some slowdown.

Consider loading the data with Dask directly

or using futures or delayed objects to embed the data into the graph without repetition.

See also https://docs.dask.org/en/stable/best-practices.html#load-data-with-dask for more information.

warnings.warn(

/data/hpcdata/users/bryald/miniconda3/envs/icenet0.2.9_dev/lib/python3.11/site-packages/distributed/client.py:3370: UserWarning: Sending large graph of size 22.10 MiB.

This may cause some slowdown.

Consider loading the data with Dask directly

or using futures or delayed objects to embed the data into the graph without repetition.

See also https://docs.dask.org/en/stable/best-practices.html#load-data-with-dask for more information.

warnings.warn(

/data/hpcdata/users/bryald/miniconda3/envs/icenet0.2.9_dev/lib/python3.11/site-packages/distributed/client.py:3370: UserWarning: Sending large graph of size 22.10 MiB.

This may cause some slowdown.

Consider loading the data with Dask directly

or using futures or delayed objects to embed the data into the graph without repetition.

See also https://docs.dask.org/en/stable/best-practices.html#load-data-with-dask for more information.

warnings.warn(

/data/hpcdata/users/bryald/miniconda3/envs/icenet0.2.9_dev/lib/python3.11/site-packages/distributed/client.py:3370: UserWarning: Sending large graph of size 22.10 MiB.

This may cause some slowdown.

Consider loading the data with Dask directly

or using futures or delayed objects to embed the data into the graph without repetition.

See also https://docs.dask.org/en/stable/best-practices.html#load-data-with-dask for more information.

warnings.warn(

/data/hpcdata/users/bryald/miniconda3/envs/icenet0.2.9_dev/lib/python3.11/site-packages/distributed/client.py:3370: UserWarning: Sending large graph of size 22.10 MiB.

This may cause some slowdown.

Consider loading the data with Dask directly

or using futures or delayed objects to embed the data into the graph without repetition.

See also https://docs.dask.org/en/stable/best-practices.html#load-data-with-dask for more information.

warnings.warn(

/data/hpcdata/users/bryald/miniconda3/envs/icenet0.2.9_dev/lib/python3.11/site-packages/distributed/client.py:3370: UserWarning: Sending large graph of size 22.10 MiB.

This may cause some slowdown.

Consider loading the data with Dask directly

or using futures or delayed objects to embed the data into the graph without repetition.

See also https://docs.dask.org/en/stable/best-practices.html#load-data-with-dask for more information.

warnings.warn(

/data/hpcdata/users/bryald/miniconda3/envs/icenet0.2.9_dev/lib/python3.11/site-packages/distributed/client.py:3370: UserWarning: Sending large graph of size 22.10 MiB.

This may cause some slowdown.

Consider loading the data with Dask directly

or using futures or delayed objects to embed the data into the graph without repetition.

See also https://docs.dask.org/en/stable/best-practices.html#load-data-with-dask for more information.

warnings.warn(

/data/hpcdata/users/bryald/miniconda3/envs/icenet0.2.9_dev/lib/python3.11/site-packages/distributed/client.py:3370: UserWarning: Sending large graph of size 22.10 MiB.

This may cause some slowdown.

Consider loading the data with Dask directly

or using futures or delayed objects to embed the data into the graph without repetition.

See also https://docs.dask.org/en/stable/best-practices.html#load-data-with-dask for more information.

warnings.warn(

/data/hpcdata/users/bryald/miniconda3/envs/icenet0.2.9_dev/lib/python3.11/site-packages/distributed/client.py:3370: UserWarning: Sending large graph of size 22.10 MiB.

This may cause some slowdown.

Consider loading the data with Dask directly

or using futures or delayed objects to embed the data into the graph without repetition.

See also https://docs.dask.org/en/stable/best-practices.html#load-data-with-dask for more information.

warnings.warn(

/data/hpcdata/users/bryald/miniconda3/envs/icenet0.2.9_dev/lib/python3.11/site-packages/distributed/client.py:3370: UserWarning: Sending large graph of size 22.10 MiB.

This may cause some slowdown.

Consider loading the data with Dask directly

or using futures or delayed objects to embed the data into the graph without repetition.

See also https://docs.dask.org/en/stable/best-practices.html#load-data-with-dask for more information.

warnings.warn(

2025-02-18 15:42:13,760 - distributed.scheduler - WARNING - Detected different `run_spec` for key ('astype-8edca25275ac5fe21814770f6825e20b', 0, 0) between two consecutive calls to `update_graph`. This can cause failures and deadlocks down the line. Please ensure unique key names. If you are using a standard dask collections, consider releasing all the data before resubmitting another computation. More details and help can be found at https://github.com/dask/dask/issues/9888.

Debugging information

---------------------

old task state: processing

old run_spec: Alias(('astype-8edca25275ac5fe21814770f6825e20b', 0, 0)->('array-getitem-astype-8edca25275ac5fe21814770f6825e20b', 0, 0))

new run_spec: Alias(('astype-8edca25275ac5fe21814770f6825e20b', 0, 0)->('getitem-astype-8edca25275ac5fe21814770f6825e20b', 0, 0))

old dependencies: {('array-getitem-astype-8edca25275ac5fe21814770f6825e20b', 0, 0)}

new dependencies: {('getitem-astype-8edca25275ac5fe21814770f6825e20b', 0, 0)}

/data/hpcdata/users/bryald/miniconda3/envs/icenet0.2.9_dev/lib/python3.11/site-packages/distributed/client.py:3370: UserWarning: Sending large graph of size 22.10 MiB.

This may cause some slowdown.

Consider loading the data with Dask directly

or using futures or delayed objects to embed the data into the graph without repetition.

See also https://docs.dask.org/en/stable/best-practices.html#load-data-with-dask for more information.

warnings.warn(

/data/hpcdata/users/bryald/miniconda3/envs/icenet0.2.9_dev/lib/python3.11/site-packages/distributed/client.py:3370: UserWarning: Sending large graph of size 22.10 MiB.

This may cause some slowdown.

Consider loading the data with Dask directly

or using futures or delayed objects to embed the data into the graph without repetition.

See also https://docs.dask.org/en/stable/best-practices.html#load-data-with-dask for more information.

warnings.warn(

/data/hpcdata/users/bryald/miniconda3/envs/icenet0.2.9_dev/lib/python3.11/site-packages/distributed/client.py:3370: UserWarning: Sending large graph of size 22.10 MiB.

This may cause some slowdown.

Consider loading the data with Dask directly

or using futures or delayed objects to embed the data into the graph without repetition.

See also https://docs.dask.org/en/stable/best-practices.html#load-data-with-dask for more information.

warnings.warn(

2025-02-18 15:42:14,532 - distributed.scheduler - WARNING - Detected different `run_spec` for key ('astype-bbd1124cd4ebdf229756b011d5b455c2', 0, 0) between two consecutive calls to `update_graph`. This can cause failures and deadlocks down the line. Please ensure unique key names. If you are using a standard dask collections, consider releasing all the data before resubmitting another computation. More details and help can be found at https://github.com/dask/dask/issues/9888.

Debugging information

---------------------

old task state: released

old run_spec: Alias(('astype-bbd1124cd4ebdf229756b011d5b455c2', 0, 0)->('getitem-astype-bbd1124cd4ebdf229756b011d5b455c2', 0, 0))

new run_spec: Alias(('astype-bbd1124cd4ebdf229756b011d5b455c2', 0, 0)->('array-getitem-astype-bbd1124cd4ebdf229756b011d5b455c2', 0, 0))

old dependencies: {('getitem-astype-bbd1124cd4ebdf229756b011d5b455c2', 0, 0)}

new dependencies: {('array-getitem-astype-bbd1124cd4ebdf229756b011d5b455c2', 0, 0)}

/data/hpcdata/users/bryald/miniconda3/envs/icenet0.2.9_dev/lib/python3.11/site-packages/distributed/client.py:3370: UserWarning: Sending large graph of size 22.10 MiB.

This may cause some slowdown.

Consider loading the data with Dask directly

or using futures or delayed objects to embed the data into the graph without repetition.

See also https://docs.dask.org/en/stable/best-practices.html#load-data-with-dask for more information.

warnings.warn(

2025-02-18 15:42:14,669 - distributed.scheduler - WARNING - Detected different `run_spec` for key ('astype-bbd1124cd4ebdf229756b011d5b455c2', 0, 0) between two consecutive calls to `update_graph`. This can cause failures and deadlocks down the line. Please ensure unique key names. If you are using a standard dask collections, consider releasing all the data before resubmitting another computation. More details and help can be found at https://github.com/dask/dask/issues/9888.

Debugging information

---------------------

old task state: processing

old run_spec: Alias(('astype-bbd1124cd4ebdf229756b011d5b455c2', 0, 0)->('getitem-astype-bbd1124cd4ebdf229756b011d5b455c2', 0, 0))

new run_spec: Alias(('astype-bbd1124cd4ebdf229756b011d5b455c2', 0, 0)->('array-getitem-astype-bbd1124cd4ebdf229756b011d5b455c2', 0, 0))

old dependencies: {('getitem-astype-bbd1124cd4ebdf229756b011d5b455c2', 0, 0)}

new dependencies: {('array-getitem-astype-bbd1124cd4ebdf229756b011d5b455c2', 0, 0)}

/data/hpcdata/users/bryald/miniconda3/envs/icenet0.2.9_dev/lib/python3.11/site-packages/distributed/client.py:3370: UserWarning: Sending large graph of size 22.10 MiB.

This may cause some slowdown.

Consider loading the data with Dask directly

or using futures or delayed objects to embed the data into the graph without repetition.

See also https://docs.dask.org/en/stable/best-practices.html#load-data-with-dask for more information.

warnings.warn(

2025-02-18 15:42:16,029 - distributed.scheduler - WARNING - Detected different `run_spec` for key ('astype-8edca25275ac5fe21814770f6825e20b', 0, 0) between two consecutive calls to `update_graph`. This can cause failures and deadlocks down the line. Please ensure unique key names. If you are using a standard dask collections, consider releasing all the data before resubmitting another computation. More details and help can be found at https://github.com/dask/dask/issues/9888.

Debugging information

---------------------

old task state: released

old run_spec: Alias(('astype-8edca25275ac5fe21814770f6825e20b', 0, 0)->('array-getitem-astype-8edca25275ac5fe21814770f6825e20b', 0, 0))

new run_spec: Alias(('astype-8edca25275ac5fe21814770f6825e20b', 0, 0)->('getitem-astype-8edca25275ac5fe21814770f6825e20b', 0, 0))

old dependencies: {('array-getitem-astype-8edca25275ac5fe21814770f6825e20b', 0, 0)}

new dependencies: {('getitem-astype-8edca25275ac5fe21814770f6825e20b', 0, 0)}

2025-02-18 15:42:17,322 - distributed.scheduler - WARNING - Detected different `run_spec` for key ('open_dataset-siconca_abs-91ef2c2db1f71f7aeb21e08939b695ac', 36, 0, 0) between two consecutive calls to `update_graph`. This can cause failures and deadlocks down the line. Please ensure unique key names. If you are using a standard dask collections, consider releasing all the data before resubmitting another computation. More details and help can be found at https://github.com/dask/dask/issues/9888.

Debugging information

---------------------

old task state: released

old run_spec: <Task ('open_dataset-siconca_abs-91ef2c2db1f71f7aeb21e08939b695ac', 36, 0, 0) getter(...)>

new run_spec: Alias(('open_dataset-siconca_abs-91ef2c2db1f71f7aeb21e08939b695ac', 36, 0, 0)->('original-open_dataset-siconca_abs-91ef2c2db1f71f7aeb21e08939b695ac', 36, 0, 0))

old dependencies: {'original-open_dataset-siconca_abs-91ef2c2db1f71f7aeb21e08939b695ac'}

new dependencies: {('original-open_dataset-siconca_abs-91ef2c2db1f71f7aeb21e08939b695ac', 36, 0, 0)}

/data/hpcdata/users/bryald/miniconda3/envs/icenet0.2.9_dev/lib/python3.11/site-packages/distributed/client.py:3370: UserWarning: Sending large graph of size 22.10 MiB.

This may cause some slowdown.

Consider loading the data with Dask directly

or using futures or delayed objects to embed the data into the graph without repetition.

See also https://docs.dask.org/en/stable/best-practices.html#load-data-with-dask for more information.

warnings.warn(

2025-02-18 15:42:18,711 - distributed.scheduler - WARNING - Detected different `run_spec` for key ('open_dataset-siconca_abs-91ef2c2db1f71f7aeb21e08939b695ac', 52, 0, 0) between two consecutive calls to `update_graph`. This can cause failures and deadlocks down the line. Please ensure unique key names. If you are using a standard dask collections, consider releasing all the data before resubmitting another computation. More details and help can be found at https://github.com/dask/dask/issues/9888.

Debugging information

---------------------

old task state: released

old run_spec: <Task ('open_dataset-siconca_abs-91ef2c2db1f71f7aeb21e08939b695ac', 52, 0, 0) getter(...)>

new run_spec: Alias(('open_dataset-siconca_abs-91ef2c2db1f71f7aeb21e08939b695ac', 52, 0, 0)->('original-open_dataset-siconca_abs-91ef2c2db1f71f7aeb21e08939b695ac', 52, 0, 0))

old dependencies: {'original-open_dataset-siconca_abs-91ef2c2db1f71f7aeb21e08939b695ac'}

new dependencies: {('original-open_dataset-siconca_abs-91ef2c2db1f71f7aeb21e08939b695ac', 52, 0, 0)}

/data/hpcdata/users/bryald/miniconda3/envs/icenet0.2.9_dev/lib/python3.11/site-packages/distributed/client.py:3370: UserWarning: Sending large graph of size 22.10 MiB.

This may cause some slowdown.

Consider loading the data with Dask directly

or using futures or delayed objects to embed the data into the graph without repetition.

See also https://docs.dask.org/en/stable/best-practices.html#load-data-with-dask for more information.

warnings.warn(

2025-02-18 15:42:20,143 - distributed.scheduler - WARNING - Detected different `run_spec` for key ('open_dataset-siconca_abs-91ef2c2db1f71f7aeb21e08939b695ac', 58, 0, 0) between two consecutive calls to `update_graph`. This can cause failures and deadlocks down the line. Please ensure unique key names. If you are using a standard dask collections, consider releasing all the data before resubmitting another computation. More details and help can be found at https://github.com/dask/dask/issues/9888.

Debugging information

---------------------

old task state: released

old run_spec: <Task ('open_dataset-siconca_abs-91ef2c2db1f71f7aeb21e08939b695ac', 58, 0, 0) getter(...)>

new run_spec: Alias(('open_dataset-siconca_abs-91ef2c2db1f71f7aeb21e08939b695ac', 58, 0, 0)->('original-open_dataset-siconca_abs-91ef2c2db1f71f7aeb21e08939b695ac', 58, 0, 0))

old dependencies: {'original-open_dataset-siconca_abs-91ef2c2db1f71f7aeb21e08939b695ac'}

new dependencies: {('original-open_dataset-siconca_abs-91ef2c2db1f71f7aeb21e08939b695ac', 58, 0, 0)}

/data/hpcdata/users/bryald/miniconda3/envs/icenet0.2.9_dev/lib/python3.11/site-packages/distributed/client.py:3370: UserWarning: Sending large graph of size 22.10 MiB.

This may cause some slowdown.

Consider loading the data with Dask directly

or using futures or delayed objects to embed the data into the graph without repetition.

See also https://docs.dask.org/en/stable/best-practices.html#load-data-with-dask for more information.

warnings.warn(

/data/hpcdata/users/bryald/miniconda3/envs/icenet0.2.9_dev/lib/python3.11/site-packages/distributed/client.py:3370: UserWarning: Sending large graph of size 22.10 MiB.

This may cause some slowdown.

Consider loading the data with Dask directly

or using futures or delayed objects to embed the data into the graph without repetition.

See also https://docs.dask.org/en/stable/best-practices.html#load-data-with-dask for more information.

warnings.warn(

/data/hpcdata/users/bryald/miniconda3/envs/icenet0.2.9_dev/lib/python3.11/site-packages/distributed/client.py:3370: UserWarning: Sending large graph of size 22.10 MiB.

This may cause some slowdown.

Consider loading the data with Dask directly

or using futures or delayed objects to embed the data into the graph without repetition.

See also https://docs.dask.org/en/stable/best-practices.html#load-data-with-dask for more information.

warnings.warn(

2025-02-18 15:42:21,048 - distributed.scheduler - WARNING - Detected different `run_spec` for key ('astype-8edca25275ac5fe21814770f6825e20b', 0, 0) between two consecutive calls to `update_graph`. This can cause failures and deadlocks down the line. Please ensure unique key names. If you are using a standard dask collections, consider releasing all the data before resubmitting another computation. More details and help can be found at https://github.com/dask/dask/issues/9888.

Debugging information

---------------------

old task state: released

old run_spec: Alias(('astype-8edca25275ac5fe21814770f6825e20b', 0, 0)->('array-getitem-astype-8edca25275ac5fe21814770f6825e20b', 0, 0))

new run_spec: Alias(('astype-8edca25275ac5fe21814770f6825e20b', 0, 0)->('getitem-astype-8edca25275ac5fe21814770f6825e20b', 0, 0))

old dependencies: {('array-getitem-astype-8edca25275ac5fe21814770f6825e20b', 0, 0)}

new dependencies: {('getitem-astype-8edca25275ac5fe21814770f6825e20b', 0, 0)}

/data/hpcdata/users/bryald/miniconda3/envs/icenet0.2.9_dev/lib/python3.11/site-packages/distributed/client.py:3370: UserWarning: Sending large graph of size 22.10 MiB.

This may cause some slowdown.

Consider loading the data with Dask directly

or using futures or delayed objects to embed the data into the graph without repetition.

See also https://docs.dask.org/en/stable/best-practices.html#load-data-with-dask for more information.

warnings.warn(

2025-02-18 15:42:21,538 - distributed.scheduler - WARNING - Detected different `run_spec` for key ('open_dataset-siconca_abs-91ef2c2db1f71f7aeb21e08939b695ac', 42, 0, 0) between two consecutive calls to `update_graph`. This can cause failures and deadlocks down the line. Please ensure unique key names. If you are using a standard dask collections, consider releasing all the data before resubmitting another computation. More details and help can be found at https://github.com/dask/dask/issues/9888.

Debugging information

---------------------

old task state: released

old run_spec: <Task ('open_dataset-siconca_abs-91ef2c2db1f71f7aeb21e08939b695ac', 42, 0, 0) getter(...)>

new run_spec: Alias(('open_dataset-siconca_abs-91ef2c2db1f71f7aeb21e08939b695ac', 42, 0, 0)->('original-open_dataset-siconca_abs-91ef2c2db1f71f7aeb21e08939b695ac', 42, 0, 0))

old dependencies: {'original-open_dataset-siconca_abs-91ef2c2db1f71f7aeb21e08939b695ac'}

new dependencies: {('original-open_dataset-siconca_abs-91ef2c2db1f71f7aeb21e08939b695ac', 42, 0, 0)}

2025-02-18 15:42:21,644 - distributed.scheduler - WARNING - Detected different `run_spec` for key ('open_dataset-siconca_abs-91ef2c2db1f71f7aeb21e08939b695ac', 38, 0, 0) between two consecutive calls to `update_graph`. This can cause failures and deadlocks down the line. Please ensure unique key names. If you are using a standard dask collections, consider releasing all the data before resubmitting another computation. More details and help can be found at https://github.com/dask/dask/issues/9888.

Debugging information

---------------------

old task state: released

old run_spec: <Task ('open_dataset-siconca_abs-91ef2c2db1f71f7aeb21e08939b695ac', 38, 0, 0) getter(...)>

new run_spec: Alias(('open_dataset-siconca_abs-91ef2c2db1f71f7aeb21e08939b695ac', 38, 0, 0)->('original-open_dataset-siconca_abs-91ef2c2db1f71f7aeb21e08939b695ac', 38, 0, 0))

old dependencies: {'original-open_dataset-siconca_abs-91ef2c2db1f71f7aeb21e08939b695ac'}

new dependencies: {('original-open_dataset-siconca_abs-91ef2c2db1f71f7aeb21e08939b695ac', 38, 0, 0)}

/data/hpcdata/users/bryald/miniconda3/envs/icenet0.2.9_dev/lib/python3.11/site-packages/distributed/client.py:3370: UserWarning: Sending large graph of size 22.10 MiB.

This may cause some slowdown.

Consider loading the data with Dask directly

or using futures or delayed objects to embed the data into the graph without repetition.

See also https://docs.dask.org/en/stable/best-practices.html#load-data-with-dask for more information.

warnings.warn(

/data/hpcdata/users/bryald/miniconda3/envs/icenet0.2.9_dev/lib/python3.11/site-packages/distributed/client.py:3370: UserWarning: Sending large graph of size 22.10 MiB.

This may cause some slowdown.

Consider loading the data with Dask directly

or using futures or delayed objects to embed the data into the graph without repetition.

See also https://docs.dask.org/en/stable/best-practices.html#load-data-with-dask for more information.

warnings.warn(

/data/hpcdata/users/bryald/miniconda3/envs/icenet0.2.9_dev/lib/python3.11/site-packages/distributed/client.py:3370: UserWarning: Sending large graph of size 22.10 MiB.

This may cause some slowdown.

Consider loading the data with Dask directly

or using futures or delayed objects to embed the data into the graph without repetition.

See also https://docs.dask.org/en/stable/best-practices.html#load-data-with-dask for more information.

warnings.warn(